2. Fixed Point Weight Quantization Analysis

TL;DR (Abstract)

Here we summarize the article to provide a high-level overview. The modern ML field widely uses quantization to compress models and accelerate compute. How we actually quantize parameters centers on minimizing the quantization error. This error is not trivial to model, due to its dependence on the input signal. Our goal in this article is to explore a first-principles approach to derive the quantization error under a specific scheme (fixed-point per-channel weight quantization). We model the input signal through its PDF, and use the observation that deep learning weights approximately follow gaussian distributions to simplify our model. Upon testing on Llama-7b's parameters, we find that a theoretical model using the gaussian assumption reasonably captures the quantization error distribution. Furthermore, if the ratio of the quantization grid size to the distribution's standard deviation ($q/\sigma$) is small, the quantization error can be modeled as simple additive uniform noise (the PQN model).

Introduction

The basic procedure of quantization is pretty simple, but its ramifications for a model's accuracy can be large and highly unpredictable. A good first pass we quantizers[1] use to gauge how lossy a quantization scheme is, is to measure the error between full-precision and low-precision parameters. The intuition is that if the error is large, the quantized model will probably do badly on the task at hand. At my job, I would go through many, many distribution plots of these errors to glean some patterns. As I merrily swiped through these plots, two questions would consistently come to mind:

- Can we motivate the quantization error theoretically? In other words, can we model the error a priori without being handed a tensor of weights/activations first?

- What exactly is the relationship between the error and actual model accuracy? We know there is one, but how do we quantify it?

Beyond my own curiosity, these questions have practical implications too. If we can model the propagation of quantization error well enough without expensive runs of the model, we can get a sense of where the model gets hurt the most, and work to contain the damage. If we can find the relationship between quantization error and model accuracy loss, we'll gain much deeper insight into how the model learns, which will likely open new doors for how we can efficiently model data.

Unfortunately, question 2 is really challenging to answer, with various research groups approaching this from different directions[2]. Thankfully, question 1 feels contained enough to tackle head on. To make life as simple as possible, let's focus first on fixed point (aka integer) quantization of a pre-trained model, where quantization bins are uniformly separated[3].

This blog breaks down into two parts: theory and experiment. The theory is taken entirely from Widrow and Kollár's amazing classic exposition of quantization in their textbook (Widrow & Kollár). However, as this exposition is quite dense, I attempt to structure and summarize the key ideas and equations and, having gone through most of the derivations myself, add a little of my own commentary. I then design and run a series of experiments to verify the theory in the ML setting and to identify extensions and limitations.

To narrow our problem scope even further, for now we only analyze weight quantization. In general, weights are much better behaved than activations (more stable, and similar in distribution across layers), and make for a good playground to test our theory. The standard quantization procedure for model weights is symmetric quantization, which we describe briefly next.

Symmetric per-channel weight quantization

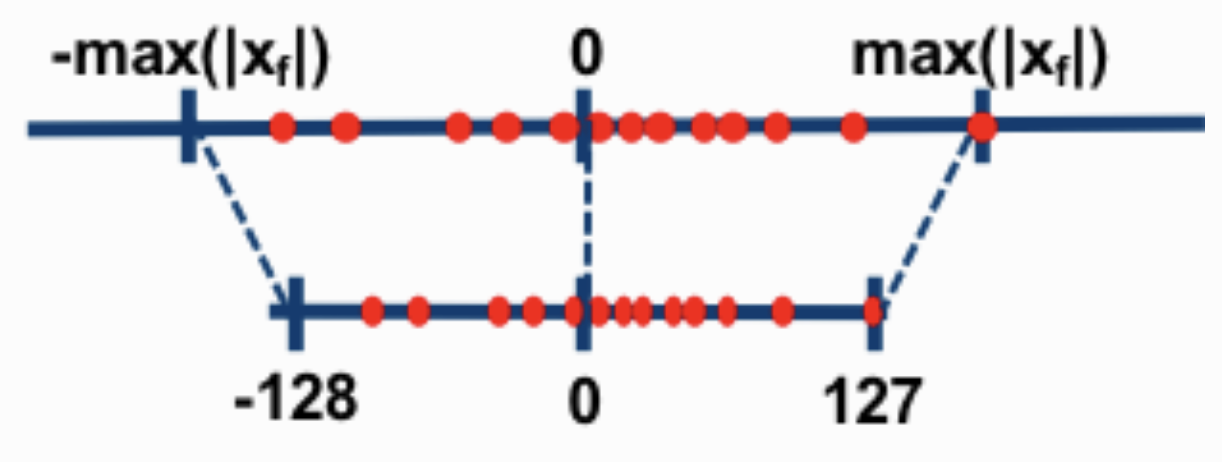

Figure 1 shows how symmetric quantization maps full-precision values to integer bins. Because the grid is symmetric about zero, 0 maps exactly to 0, so we no longer need a zero-point parameter.

Figure 1. A visual of symmetric integer quantization. Image credit: Intel Labs.

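The quantization grid size $q$ (the spacing between neighboring bins) is set by the largest-magnitude value being quantized. For a channel $\mathbf{w}$ quantized to $b$-bit signed integers with min-max scaling,

$$q = \frac{\max_i |w_i|}{2^{b-1} - 1},$$

so the largest weight lands exactly on the outermost bin ($2^{b-1} - 1 = 127$ for 8-bit).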

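Given our grid size, we can calculate our quantized values as:

$$x' = q \cdot \operatorname{round}\!\left(\frac{x}{q}\right),$$

where the integer $\operatorname{round}(x/q)$ is what gets stored, and multiplying by $q$ maps it back to the original scale.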

We're working with autoregressive language models, which primarily rely on the transformer architecture. As a result, the term 'per-channel' doesn't make much sense, since we're no longer working with image channels. Instead we define each output neuron as a channel, which means each row of our weight matrix $W \in \mathbb{R}^{d_\text{out} \times d_\text{in}}$ gets its own grid size $q$.
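The scheme above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming a weight matrix laid out as (output neurons × inputs) so that each row is a channel; the function name `quantize_per_channel` and the random stand-in matrix are our own, not taken from any particular library.

```python
import numpy as np

def quantize_per_channel(W: np.ndarray, bits: int = 8):
    """Symmetric min-max quantization with one grid size per row (output channel).

    Returns the integer codes, the per-row grid sizes q, and the dequantized weights.
    """
    c = 2 ** (bits - 1) - 1                        # largest integer code, e.g. 127 for 8-bit
    q = np.abs(W).max(axis=1, keepdims=True) / c   # one grid size per row, shape (rows, 1)
    q = np.where(q == 0, 1.0, q)                   # guard against all-zero rows
    codes = np.round(W / q).astype(np.int32)       # nearest bin; |codes| <= c by construction
    W_hat = codes * q                              # dequantized weights x'
    return codes, q, W_hat

rng = np.random.default_rng(0)
W = rng.normal(0.0, 0.02, size=(4, 4096))   # hypothetical stand-in: rows = output neurons
codes, q, W_hat = quantize_per_channel(W, bits=8)
err = W_hat - W                              # quantization error, x' - x

print(np.abs(codes).max())                   # 127: each row's largest weight hits the outermost bin
print(np.all(np.abs(err) <= q / 2 + 1e-12))  # True: rounding never moves a value more than half a bin
```

Because the grid is derived from each row's largest magnitude, no value falls outside the grid, which is why this min-max variant never clips.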

Modeling the Quantization Error

So given our quantization approach, what does the error induced by quantization look like? Notice from above that the lossy operation here comes from rounding. So we know that whatever quantized value $x'$ we produce, the error $\nu = x' - x$ lies within half a bin of zero: $-q/2 \le \nu \le q/2$.

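However, a key detail about quantization is that, given the scheme and our input signal, the error is completely deterministic. There is nothing random about it, so treating it as noise is an approximation we need to justify.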

Although the road ahead seems unclear, we can thankfully turn to the hard work done by electrical engineers over the last century to guide our understanding of quantization error. We'll find that there is still a lot we can learn from the rough approximation of uniform noise.

Before we go further: I found myself revisiting the same topics while learning about this, so I'm writing out some key nuggets of knowledge about the Fourier transform that we will refer to frequently.

Important Notes about Fourier Transforms (FT)

- The Fourier transform represents a different (but entirely equivalent) view of a given function $f(t)$. It's easiest to think about it in terms of time signals and frequencies, so let's say $f(t)$ is a time signal (e.g., an audio recording)[5]. We can interpret the FT $F(\omega)$ as the limit of a Fourier series: using sinusoids as our basis functions, what combination and proportion of frequencies ($\omega$) do we need to add up to get our original signal? The general expression for the transform is:

  $$F(\omega) = \int_{-\infty}^{\infty} f(t)\, e^{-j\omega t}\, dt$$

- One of the most elegant results from Fourier theory is the Nyquist sampling theorem. Simply put, suppose the maximum frequency contained in $f(t)$ is $B$ Hz, and you wish to compress the signal by sampling it at a frequency of $f_s$ Hz. So long as $f_s > 2B$, you can fully recover the original signal! In practice, this means a band-limited signal can be stored as a finite set of samples without any loss, allowing for much faster computation and a reduced memory footprint.

- The probability density function (PDF) $f_x(x)$ of a random variable $x$ has an equivalent representation $\Phi_x(u)$, known as its characteristic function. The transformation is just the Fourier transform of $f_x(x)$ (with a $+j$ in the exponent by convention), and it allows one to compute moments of $x$ much more easily. For example, differentiating once under the integral sign:

  $$\Phi_x(u) = \int_{-\infty}^{\infty} f_x(x)\, e^{jux}\, dx = E\!\left[e^{jux}\right], \qquad \left.\frac{d\Phi_x}{du}\right|_{u=0} = j\,E[x]$$

  Similarly, for higher order moments,

  $$E[x^n] = \frac{1}{j^n} \left.\frac{d^n \Phi_x}{du^n}\right|_{u=0}$$
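To make the moment property concrete, here is a small numerical check (our own illustration, stdlib only). It differentiates the Gaussian characteristic function $\Phi_x(u) = e^{j\mu u - \sigma^2 u^2/2}$ at $u = 0$ with central finite differences and recovers $E[x] = \mu$ and $E[x^2] = \mu^2 + \sigma^2$. The step size `h` and the values of `mu` and `sigma` are arbitrary choices.

```python
import cmath

def gaussian_cf(u: float, mu: float, sigma: float) -> complex:
    """Characteristic function of N(mu, sigma^2): E[exp(j u x)] = exp(j mu u - sigma^2 u^2 / 2)."""
    return cmath.exp(1j * mu * u - 0.5 * (sigma * u) ** 2)

def moments_from_cf(cf, h: float = 1e-4):
    """First two moments from central finite differences of the CF at u = 0.

    E[x]   = (1/j)   * Phi'(0)
    E[x^2] = (1/j^2) * Phi''(0) = -Phi''(0)
    """
    d1 = (cf(h) - cf(-h)) / (2 * h)
    d2 = (cf(h) - 2 * cf(0.0) + cf(-h)) / h ** 2
    return (d1 / 1j).real, (-d2).real

mu, sigma = 0.3, 1.5
m1, m2 = moments_from_cf(lambda u: gaussian_cf(u, mu, sigma))
print(round(m1, 4), round(m2, 4))   # 0.3 2.34  (E[x^2] = 0.09 + 2.25)
```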

Modeling the Quantization Process

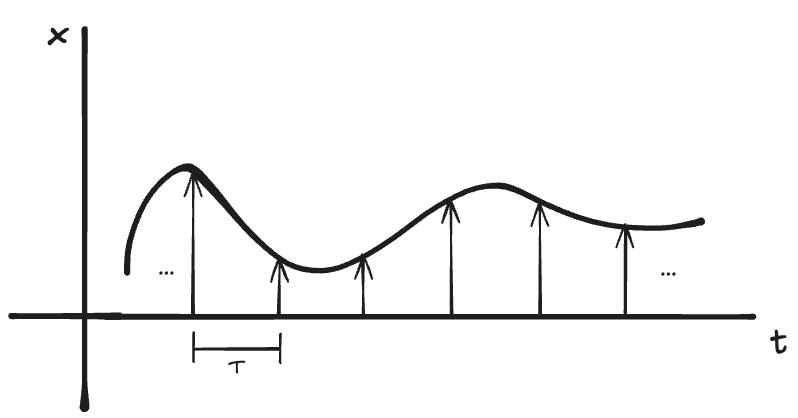

Now, how can we represent the process of quantization? Let's look at sampling a signal (Figure 2). This idea is visually similar to quantization, but we want to bin values, not just sample them. Our problem is that the quantization process is deterministic given the input signal. This makes the process quite tricky to model theoretically, as it's entirely contingent on the signal. Following Widrow and Kollár, let's instead model the quantization process on the distribution from which the signal is drawn, treating a specific signal as a sample drawn from that distribution[6].

Figure 2. A signal being sampled at a frequency of 1/T Hz.

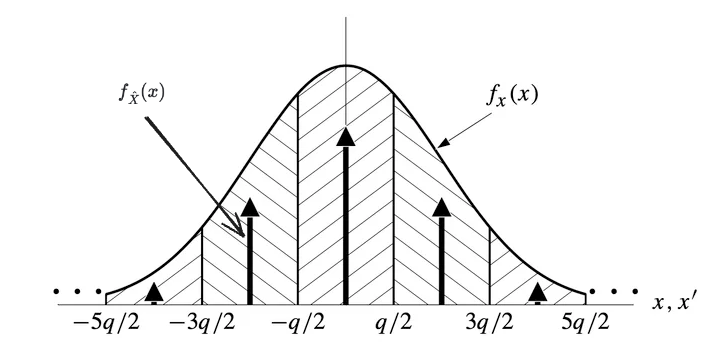

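Suppose we start with the PDF $f_x(x)$ of the input signal (Figure 3). Quantization takes all of the probability mass lying inside a bin, $kq - q/2 \le x < kq + q/2$, and piles it onto that bin's center $kq$, so the quantized output $x'$ has a PDF made of weighted impulses:

$$f_{x'}(x) = \sum_{k=-\infty}^{\infty} \delta(x - kq) \int_{kq - q/2}^{kq + q/2} f_x(\xi)\, d\xi$$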

Figure 3. An example input signal distribution, and the corresponding distribution for the quantized output

Observe that this looks just like sampling, with the modification that we're sampling areas under $f_x$ rather than points on it.

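Now, squinting at this closely brings out the key insight. If we were to convolve $f_x(x)$ with a uniform window of width $q$ (Figure 4), the value of the result at $x = kq$ would be exactly the average of $f_x$ over the $k$-th bin, i.e. that bin's area divided by $q$.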

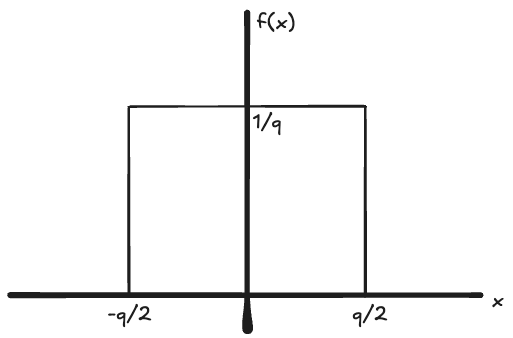

Figure 4. A graph of the uniform distribution, equivalent to a normalized window function.

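Convolving $f_x$ with the uniform PDF $f_n(x) = 1/q$ for $|x| \le q/2$ (and 0 otherwise) gives

$$(f_x * f_n)(x) = \frac{1}{q} \int_{x - q/2}^{x + q/2} f_x(\xi)\, d\xi$$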

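Sampling this function at integer intervals of $q$, and scaling each sample by $q$, recovers exactly the bin areas, so

$$f_{x'}(x) = \big(f_x * f_n\big)(x) \cdot \sum_{k=-\infty}^{\infty} q\,\delta(x - kq)$$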

Taking a step back, we now have an elegant formulation of the quantization process, and a method to find the PDF of a quantized signal $x'$ from the PDF of its input $x$: smooth with a uniform window, then sample.
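The "smooth, then sample" recipe can be checked numerically. The sketch below is our own, assuming a zero-mean, unit-variance Gaussian input and an arbitrary grid size `q = 0.5`. It convolves the PDF with a discretized uniform window, samples the result at the bin centers $kq$, scales by $q$, and compares against the exact bin probabilities from the Gaussian CDF.

```python
import math
import numpy as np

def gaussian_pdf(x, sigma=1.0):
    return np.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def gaussian_cdf(x, sigma=1.0):
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2))))

q = 0.5
dx = 1e-3
x = np.linspace(-8.0, 8.0, 16001)           # grid with spacing dx
f_x = gaussian_pdf(x)

# Convolve f_x with f_n = 1/q on [-q/2, q/2]: a discretized moving average of width q.
half = int(round(q / (2 * dx)))             # 250 grid points on each side of the center
kernel = np.full(2 * half + 1, dx / q)      # samples of f_n times dx
smoothed = np.convolve(f_x, kernel, mode="same")   # approximates (f_x * f_n)(x)

# Sample at the bin centers k*q and scale by q: this should equal the mass in each bin.
ks = np.arange(-6, 7)
idx = np.rint((ks * q - x[0]) / dx).astype(int)
mass_conv = q * smoothed[idx]
mass_direct = np.array([gaussian_cdf((k + 0.5) * q) - gaussian_cdf((k - 0.5) * q) for k in ks])

print(np.max(np.abs(mass_conv - mass_direct)))   # below 1e-3 (discretization error only)
```

The remaining mismatch comes only from approximating the window integral on a finite grid; shrinking `dx` shrinks it further.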

Two Key Properties of Quantization

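Given our treatment of quantization as "convolve with a uniform window, then sample every $q$", we can take the Fourier transform of $f_{x'}$. The convolution becomes multiplication by the window's transform $\mathrm{sinc}(qu/2)$, and the sampling becomes a sum of shifted replicas:

$$\Phi_{x'}(u) = \sum_{l=-\infty}^{\infty} \Phi_x(u + l\Psi)\,\mathrm{sinc}\!\left(\frac{q(u + l\Psi)}{2}\right), \qquad \Psi = \frac{2\pi}{q}, \quad \mathrm{sinc}(z) = \frac{\sin z}{z}$$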

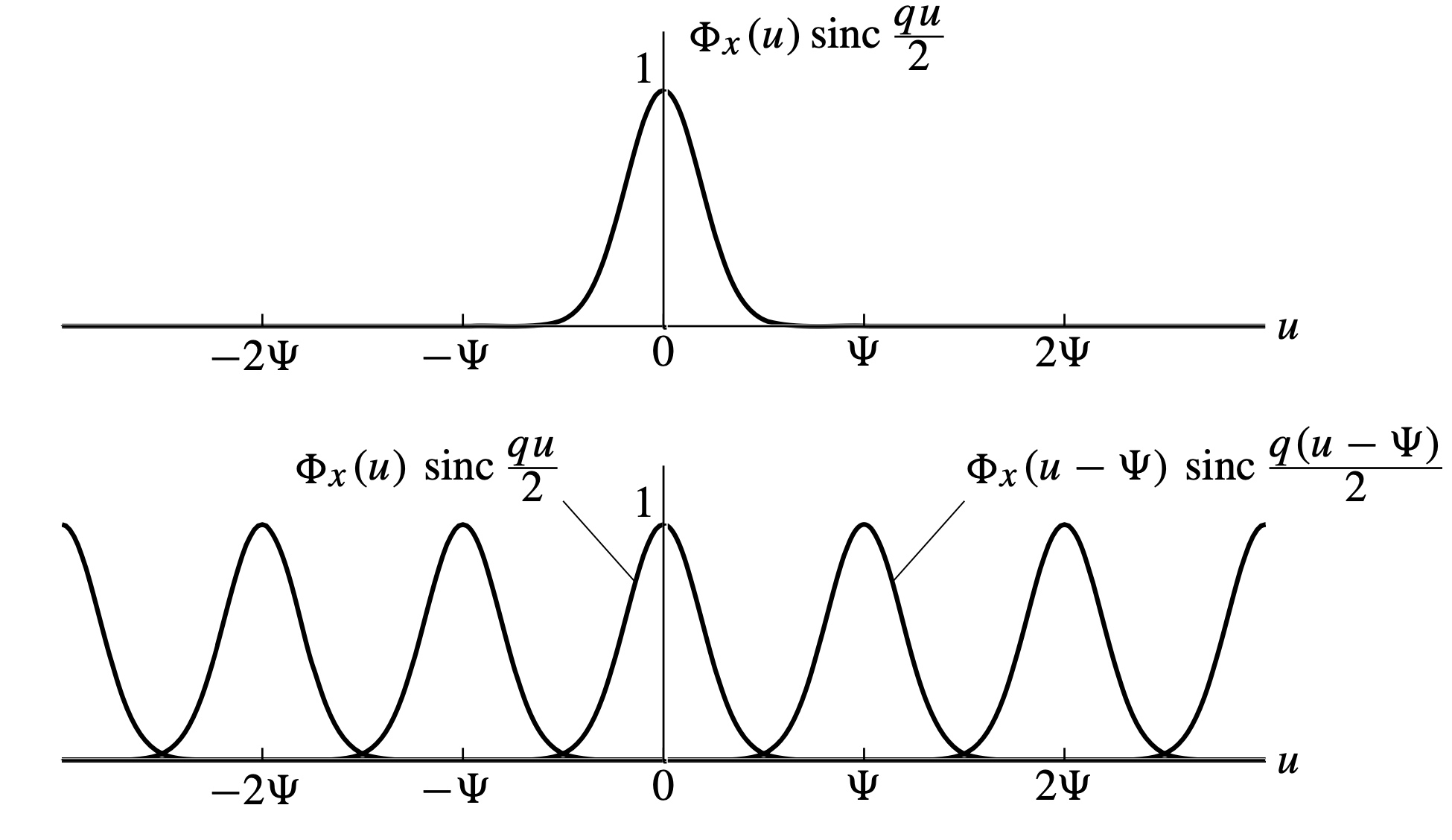

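The form of this equation is classic sampling theory: the sampled function's Fourier transform ($\Phi_{x'}$) is a sum of replicas of the original function's transform ($\Phi_x(u)\,\mathrm{sinc}(qu/2)$), shifted by integer multiples of the quantization "sampling frequency" $\Psi = 2\pi/q$.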

Figure 5. The characteristic function $\Phi_{x'}(u)$ of the quantized signal, made up of replicas repeated every $\Psi = 2\pi/q$.

Now, the following quantization theorems (QTs) follow intuitively:

- QT I: If $\Phi_x(u) = 0$ for $|u| > \Psi/2 = \pi/q$, we can fully recover our input distribution from our quantized distribution. This theorem is identical to the Nyquist sampling theorem, and simply states that if our quantization grid is small enough, we can completely recover the input distribution from the quantized one. We can see this directly from Figure 5: if the replicas are spaced far enough apart, we can use a low-pass filter to extract the replica at $l = 0$ (centered on $u = 0$) and recover our input PDF.

- QT II: If $\Phi_x(u) = 0$ for $|u| > \Psi - \varepsilon$ (for some arbitrarily small $\varepsilon > 0$), we can fully recover the moments of $x$ from the moments of $x'$. In Figure 5, this is as if the replicas overlapped, but not enough to reach $u = 0$, so the derivatives of $\Phi_{x'}$ at $u = 0$ (which give the moments of $x'$) come from the central replica alone. Furthermore, we'll soon see that if QT II holds, our quantization error follows the uniform noise model. Note that if QT I holds, QT II automatically holds.

Theoretical Quantization Error

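To start off modeling the quantization error $\nu = x' - x$, notice that a given error value $\nu \in [-q/2, q/2]$ occurs whenever the input sits at $x = kq - \nu$ for some integer $k$. Summing over all bins gives the error PDF as a product of a periodic, "folded" input PDF and a window:

$$f_\nu(\nu) = \mathrm{rect}\!\left(\frac{\nu}{q}\right) \sum_{k=-\infty}^{\infty} f_x(kq - \nu)$$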

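Here, $\mathrm{rect}(\nu/q)$ equals 1 for $|\nu| \le q/2$ and 0 otherwise, restricting the error to a single bin.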

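Next we compute the characteristic function of the error. This follows (Widrow & Kollár) chapter 5.1 for the mathematical derivation. At a high level, the product in the input domain leads to a convolution in the frequency domain between the transform of the window (a sinc) and the transform of the periodic sum (a train of impulses spaced $\Psi$ apart, weighted by $\Phi_x(l\Psi)$), giving:

$$\Phi_\nu(u) = \sum_{l=-\infty}^{\infty} \Phi_x(l\Psi)\, \mathrm{sinc}\!\left(\frac{q(u + l\Psi)}{2}\right)$$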

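Taking the inverse Fourier transform of the characteristic function gives us a more powerful representation of the error distribution:

$$f_\nu(\nu) = \frac{1}{q}\,\mathrm{rect}\!\left(\frac{\nu}{q}\right)\left[1 + \sum_{l \neq 0} \Phi_x(l\Psi)\, e^{j l \Psi \nu}\right]$$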

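In both cases, notice the dependence on $\Phi_x(l\Psi)$: the input's characteristic function is only ever evaluated at integer multiples of $\Psi = 2\pi/q$.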

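When QT I or QT II is satisfied, we see that all the non-zero integer multiples of $\Psi$ fall where $\Phi_x$ vanishes, so $\Phi_x(l\Psi) = 0$ for every $l \neq 0$. The error PDF then collapses to $f_\nu(\nu) = 1/q$ on $[-q/2, q/2]$: the error is exactly uniform, with zero mean and variance $q^2/12$, regardless of the input distribution. This is the basis of the PQN (pseudo-quantization noise) model, which replaces quantization with additive uniform noise.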
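Integrating $\nu$ and $\nu^2$ against the error PDF $f_\nu(\nu) = \frac{1}{q}\sum_l \Phi_x(l\Psi)\,e^{jl\Psi\nu}$ (on $|\nu| \le q/2$) gives the first two error moments in closed form. This is a sketch of our own derivation, using $\int_{-q/2}^{q/2} \nu\, e^{jl\Psi\nu}\, d\nu = -j\,(-1)^l q^2/(2\pi l)$ and $\int_{-q/2}^{q/2} \nu^2 e^{jl\Psi\nu}\, d\nu = (-1)^l q^3/(2\pi^2 l^2)$ for $l \neq 0$, and the symmetry $\Phi_x(-u) = \Phi_x(u)^*$ to pair the $\pm l$ terms:

```latex
\begin{aligned}
E[\nu]   &= \frac{q}{\pi} \sum_{l=1}^{\infty} \frac{(-1)^{l}}{l}\, \operatorname{Im}\{\Phi_x(l\Psi)\} \\
E[\nu^2] &= \frac{q^2}{12} + \frac{q^2}{\pi^2} \sum_{l=1}^{\infty} \frac{(-1)^{l}}{l^{2}}\, \operatorname{Re}\{\Phi_x(l\Psi)\}
\end{aligned}
```

When $\Phi_x(l\Psi) = 0$ for all $l \neq 0$, both sums vanish and we recover the PQN values $E[\nu] = 0$ and $E[\nu^2] = q^2/12$. As a sanity check, a point mass at $x = \mu$ with $|\mu| < q/2$ (so $\Phi_x(u) = e^{j\mu u}$) makes these sums evaluate to $E[\nu] = -\mu$ and $E[\nu^2] = \mu^2$, as they must.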

A key input distribution that we want to look at is the Gaussian. It's been observed and hypothesized many times that pre-trained weight matrices in LLMs closely fit this distribution, so it'll be useful to explore its properties under quantization further (Dettmers et al, 2023). Let's take a look at the characteristic function for a Gaussian, given its PDF:

$$f_x(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-(x-\mu)^2/(2\sigma^2)} \quad\Longrightarrow\quad \Phi_x(u) = e^{j\mu u - \sigma^2 u^2/2}$$

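Taking a quick look at this, we realize it doesn't fit any of the QTs, as $\Phi_x(u)$ is never exactly zero for finite $u$: a Gaussian is not band-limited. Substituting it into the error PDF and pairing the $\pm l$ terms gives

$$f_\nu(\nu) = \frac{1}{q}\left[1 + 2\sum_{l=1}^{\infty} e^{-2\pi^2 l^2 \sigma^2 / q^2} \cos\!\left(\frac{2\pi l (\nu + \mu)}{q}\right)\right], \qquad |\nu| \le \frac{q}{2}$$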

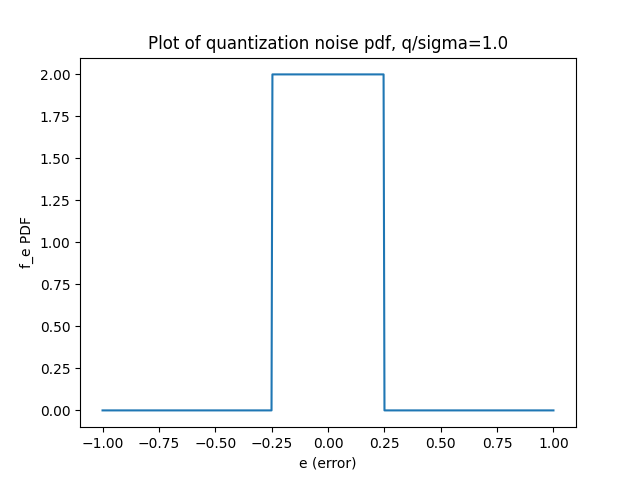

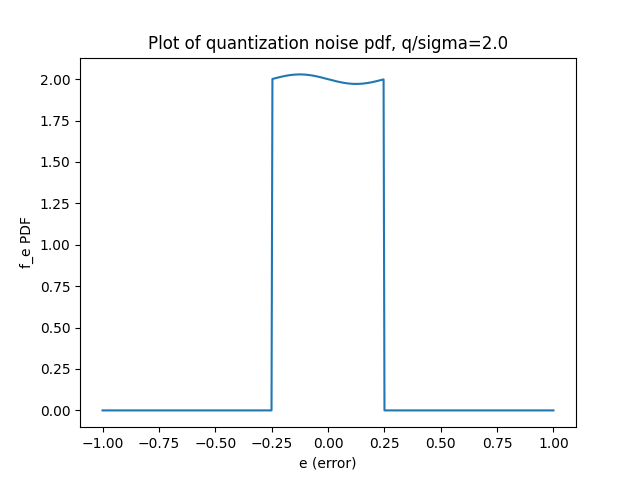

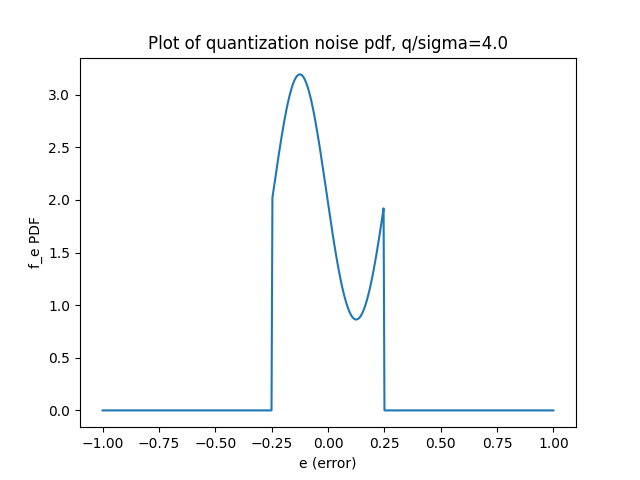

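We can use the Jacobi theta function $\vartheta_3$ to numerically calculate this PDF for different values of $q/\sigma$, since the bracketed series is exactly $\vartheta_3\big(\pi(\nu + \mu)/q,\; e^{-2\pi^2\sigma^2/q^2}\big)$.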

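As the equations show, the key value to pay close attention to is the ratio $q/\sigma$ of grid size to standard deviation. The correction terms decay as $e^{-2\pi^2 l^2 \sigma^2/q^2}$: for $q/\sigma = 1$ the leading term is about $e^{-2\pi^2} \approx 2.7 \times 10^{-9}$, at $q/\sigma = 2$ it is about $0.007$, and at $q/\sigma = 4$ it grows to about $0.29$.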

| | | |
|---|---|---|
| (a) | (b) | (c) |

Figure 5. Plots of theoretical quantization noise with increasing grid sizes (decreasing granularity). The distribution moves away from uniform once $q$ becomes large relative to $\sigma$.

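An interesting point to note is the sinusoidal behavior in the error distribution. It's only noticeable for large grid sizes, and mathematically, it stems from the $\cos\big(2\pi l(\nu + \mu)/q\big)$ terms in the error PDF, whose amplitudes $e^{-2\pi^2 l^2 \sigma^2/q^2}$ are negligible unless $q/\sigma$ is large.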
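To plot theoretical error PDFs without a special-function library, we can simply truncate the series, whose terms decay extremely fast (mpmath's `jtheta` is an option if you'd rather call $\vartheta_3$ directly). The sketch below is our own: the function name, the 50-term cutoff, and the choices `q = 1` and `mu = 0.1` are assumptions for illustration. It evaluates the Gaussian error PDF for a few values of $q/\sigma$ and compares it with a histogram of simulated errors $\nu = q\,\mathrm{round}(x/q) - x$.

```python
import numpy as np

def gaussian_error_pdf(nu, q, sigma, mu=0.0, n_terms=50):
    """Quantization-error PDF for x ~ N(mu, sigma^2) on a uniform grid of spacing q.

    Truncated series (a Jacobi theta function), valid for |nu| <= q/2:
        f(nu) = (1/q) [1 + 2 sum_l exp(-2 pi^2 l^2 sigma^2 / q^2) cos(2 pi l (nu + mu) / q)]
    """
    nu = np.asarray(nu, dtype=float)
    l = np.arange(1, n_terms + 1)[:, None]
    amps = np.exp(-2 * np.pi**2 * l**2 * sigma**2 / q**2)
    series = 1 + 2 * np.sum(amps * np.cos(2 * np.pi * l * (nu + mu) / q), axis=0)
    return np.where(np.abs(nu) <= q / 2, series / q, 0.0)

rng = np.random.default_rng(0)
q, mu = 1.0, 0.1
nu_grid = np.linspace(-q / 2, q / 2, 2001)
for ratio in (1.0, 2.0, 4.0):
    sigma = q / ratio
    pdf = gaussian_error_pdf(nu_grid, q, sigma, mu)
    # Simulate the actual rounding error and histogram it over one bin.
    x = rng.normal(mu, sigma, 1_000_000)
    err = q * np.round(x / q) - x
    hist, edges = np.histogram(err, bins=20, range=(-q / 2, q / 2), density=True)
    centers = 0.5 * (edges[:-1] + edges[1:])
    gap = np.max(np.abs(hist - gaussian_error_pdf(centers, q, sigma, mu)))
    print(f"q/sigma={ratio}: max |q*pdf - 1| = {np.max(np.abs(pdf * q - 1)):.3g}, "
          f"histogram gap = {gap:.3g}")
```

The first printed column measures the departure from a flat (uniform) PDF; it should stay tiny at $q/\sigma = 1$ and become visible at $q/\sigma = 4$, where the cosine ripple appears.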

As a first pass and sanity check of our model, we sample gaussian data, compute the quantization error statistics, and compare them to the theoretical results. The table below summarizes the results, obtained with a large sample size.

| q/σ | Theory mean | Empirical mean | Theory std dev | Empirical std dev |
|---|---|---|---|---|
| 1 | 0 | 0 | 0.144 | 0.144 |
| 2 | -0.001 | -0.001 | 0.144 | 0.144 |
| 4 | -0.046 | -0.046 | 0.116 | 0.136 |
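A sketch of this kind of sanity check: evaluate the moment series for a Gaussian input (truncated at 50 terms) and compare it against Monte Carlo estimates. The parameters below (`q = 1`, `mu = 0.1`, one million samples) and the helper name `gaussian_error_moments` are our own choices, not the setup behind the table, so the numbers will differ from it; the point is the agreement between theory and simulation within each row.

```python
import numpy as np

def gaussian_error_moments(q, sigma, mu=0.0, n_terms=50):
    """Mean and std of nu = x' - x for x ~ N(mu, sigma^2), via the truncated moment series."""
    l = np.arange(1, n_terms + 1)
    u = l * 2 * np.pi / q                                # the points l * Psi
    phi = np.exp(1j * mu * u - 0.5 * (sigma * u) ** 2)   # Gaussian CF evaluated at l * Psi
    sign = (-1.0) ** l
    mean = q / np.pi * np.sum(sign / l * phi.imag)
    second = q**2 / 12 + q**2 / np.pi**2 * np.sum(sign / l**2 * phi.real)
    return mean, np.sqrt(second - mean**2)

rng = np.random.default_rng(0)
q, mu = 1.0, 0.1
results = {}
for ratio in (1.0, 2.0, 4.0):
    sigma = q / ratio
    x = rng.normal(mu, sigma, 1_000_000)
    err = q * np.round(x / q) - x                      # empirical quantization error
    results[ratio] = (gaussian_error_moments(q, sigma, mu), (err.mean(), err.std()))
    (tm, ts), (em, es) = results[ratio]
    print(f"q/sigma={ratio}: mean theory {tm:+.4f} vs empirical {em:+.4f}; "
          f"std theory {ts:.4f} vs empirical {es:.4f}")
```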

Experiments on Llama-7b

We've built up a good understanding of the theory, but how well does it actually apply to deep neural networks? Real-world signals rarely follow a clean distribution that we can just plug and play with, but we're partially saved by the following observation: most neural network weights are approximately normally distributed. If this is true, we can use our theory to directly model the quantization error, at least the error due to weight quantization.

Now, to be honest, beyond seeing this observation cited in papers every now and then, I haven't been able to find a good explanation for why it holds. Weights are generally initialized from a normal distribution, but I've always assumed that training would change their structure significantly. While I'm still digging for a good explanation, we can take inspiration from (Dettmers et al, 2023) and simply run significance tests on all our weight tensors to check the claim.

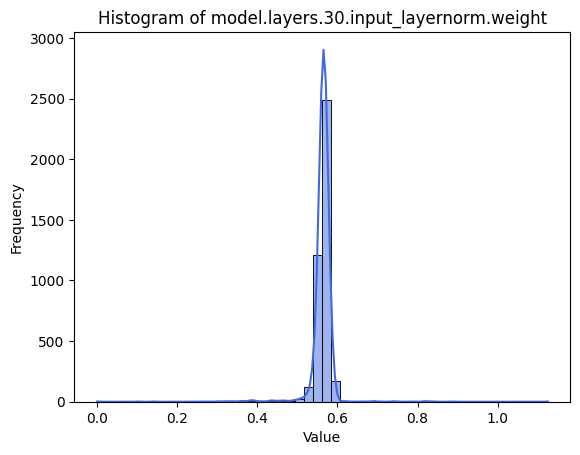

Now let's look at Llama-7b. We select Llama-7b as it's an open-source, well-studied model that is large enough to be respectable in today's world, but not so large that experiments become expensive to run. As Dettmers shows, a lot of interesting results occur at the 6.7B+ parameter range, so we don't want to pick too small a model. We follow their approach of looking at per-channel weights, and find that they're reasonably gaussian. Running the Shapiro-Wilk test at a 5% significance level tells us that 85% of weight channels are consistent with a gaussian[7] (Dudley, 2012). This is slightly different from Dettmers' reported result of 92.5%, although differences in the model versions used could account for this. We note that all the Layernorm weights fail this test, and plotting some of their histograms reveals a far-from-Gaussian shape: all of them have extreme outliers, as demonstrated by their large kurtosis (see Figure 6). Unlike linear layers, Layernorm weights are initialized to all ones, which likely keeps their trained distribution further from gaussian. This initialization also means the center of the distribution is no longer 0, so our symmetric quantization scheme would waste many bins that stay empty.

Figure 6. A histogram of a selected Layernorm. The distribution's skewness is -2.5, and its excess kurtosis is 103.2.
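The shape statistics quoted in Figure 6's caption are easy to compute per channel. Below is a minimal NumPy sketch (our own helper, using population moments) run on synthetic data only: Gaussian rows, and hypothetical norm-like rows centered at 1 with a handful of extreme outliers. For the normality test itself, `scipy.stats.shapiro` implements Shapiro-Wilk; we leave it out here to keep the example dependency-light.

```python
import numpy as np

def skew_and_excess_kurtosis(W):
    """Per-row (per-channel) sample skewness and excess kurtosis, using population moments."""
    centered = W - W.mean(axis=1, keepdims=True)
    m2 = np.mean(centered**2, axis=1)
    m3 = np.mean(centered**3, axis=1)
    m4 = np.mean(centered**4, axis=1)
    return m3 / m2**1.5, m4 / m2**2 - 3.0

rng = np.random.default_rng(0)
gaussian_rows = rng.normal(0.0, 0.02, size=(8, 4096))

# Hypothetical norm-like rows: values clustered near 1, plus 4 extreme negative outliers per row.
norm_like = 1.0 + rng.normal(0.0, 0.05, size=(8, 4096))
norm_like[:, :4] -= 3.0

skew_g, kurt_g = skew_and_excess_kurtosis(gaussian_rows)
skew_n, kurt_n = skew_and_excess_kurtosis(norm_like)
print("gaussian rows, excess kurtosis:", np.round(kurt_g, 2))
print("norm-like rows, skewness:", np.round(skew_n, 1), "excess kurtosis:", np.round(kurt_n, 1))
```

A few outliers out of thousands of values are enough to push the excess kurtosis into the hundreds, while the Gaussian rows stay near 0.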

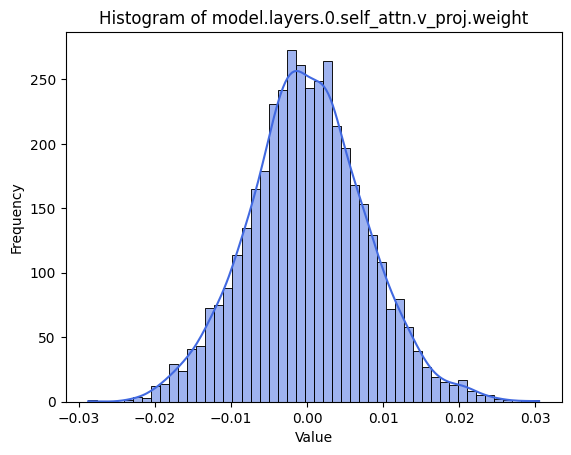

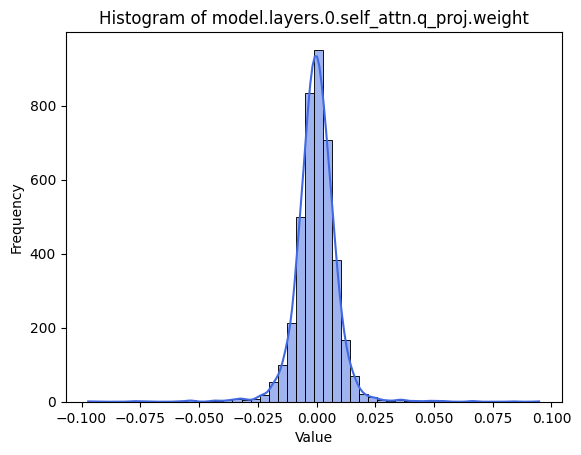

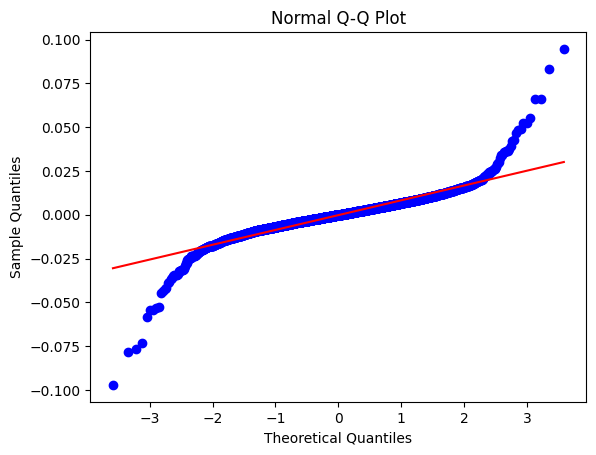

Among the remaining parameters, we note that they all have a symmetric, bell-shaped curve (see Figure 7). Let's take a look at an example failure mode: weights that don't fit the gaussian. The outliers aren't as extreme as in the case of the Layernorm, and we can visualize the outlier-induced heavy tails via a q-q plot of the data against a theoretical gaussian distribution. The story of outliers is incredibly interesting in the quantization space, and one that I'll dive into in my next post. For now, however, we posit that these outliers are minor enough to continue using the gaussian model, and proceed with our attempt to predict quantization error using our model.

| | | |
|---|---|---|
| (a) | (b) | (c) |

Figure 7. (a) A histogram of a selected Attention weight matrix that fits a gaussian. The distribution's skewness is 0.1, and its excess kurtosis is 0.2. (b) An example weight distribution that doesn't fit a gaussian distribution, and (c) its corresponding q-q plot. The tails are heavier than expected due to outliers, but not as extreme as in the Layernorm weight distributions. Here, the skewness is still small at -0.2, but the kurtosis is larger, at 16.7.

For each weight channel $c$, we compute the mean and standard deviation as follows, and set the theoretical distribution to be $\mathcal{N}(\mu_c, \sigma_c^2)$:

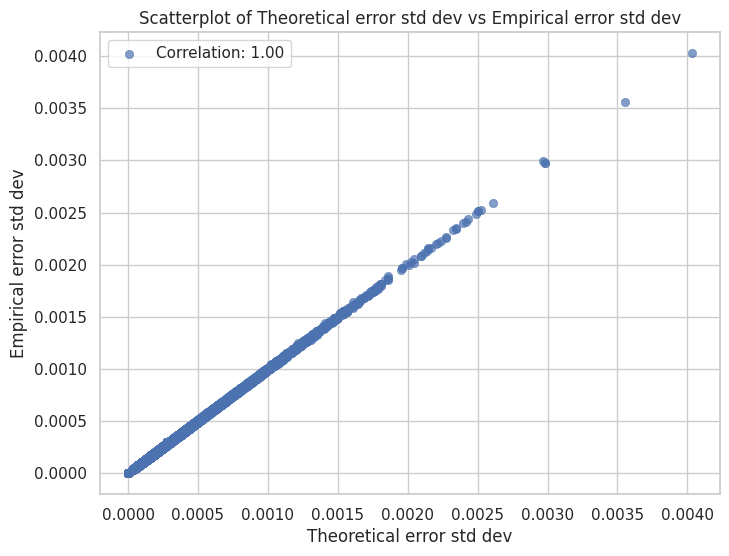

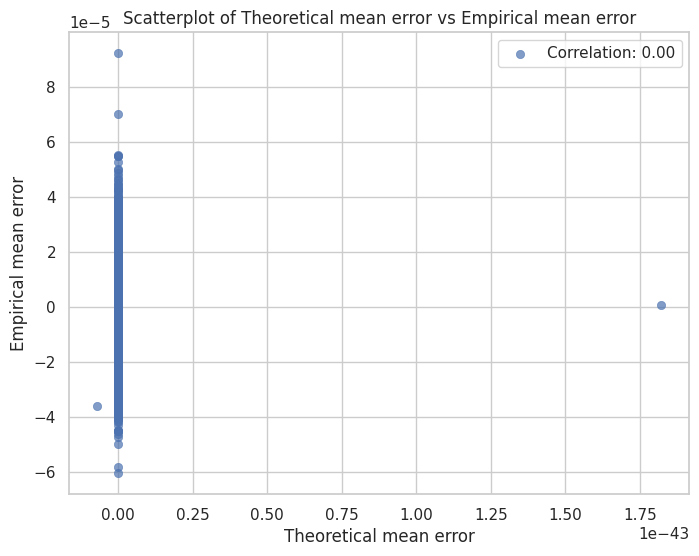

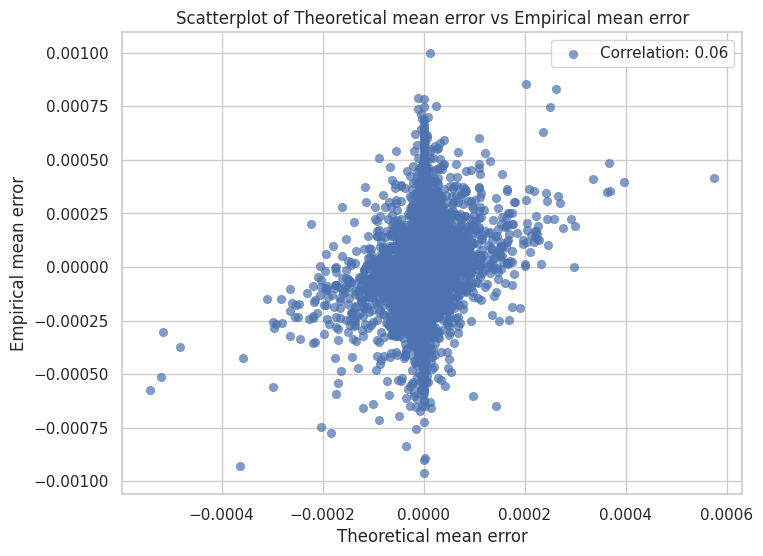

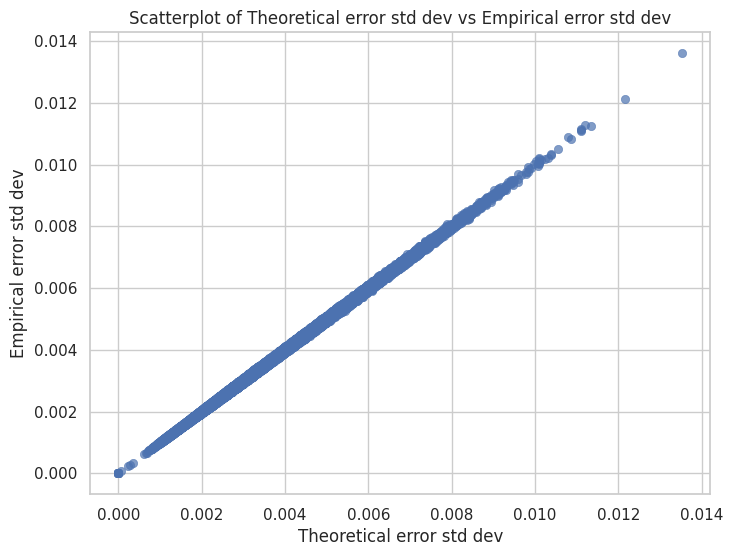

We compute the error moments for all the non-Layernorm layers and plot them in Figures 8a and 8b, which show scatter plots of the theoretical standard deviation and mean of the error against their empirical values. We find that our model explains the variance of quantization error quite well!

| | | |
|---|---|---|
| (a) | (b) | (c) |

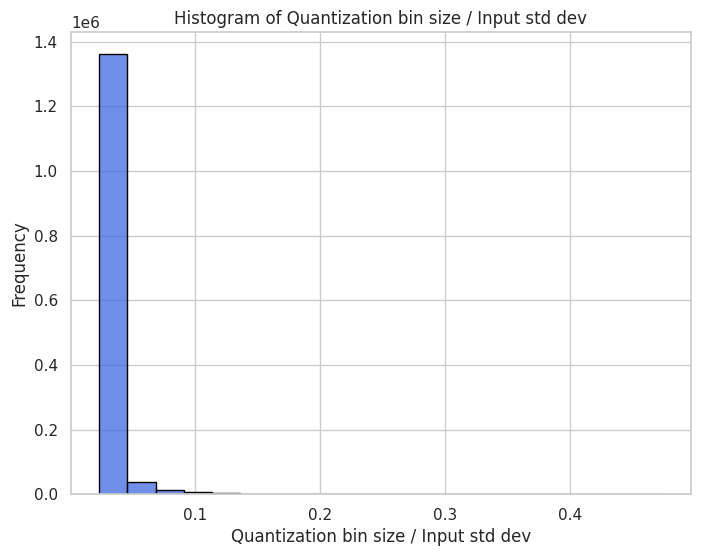

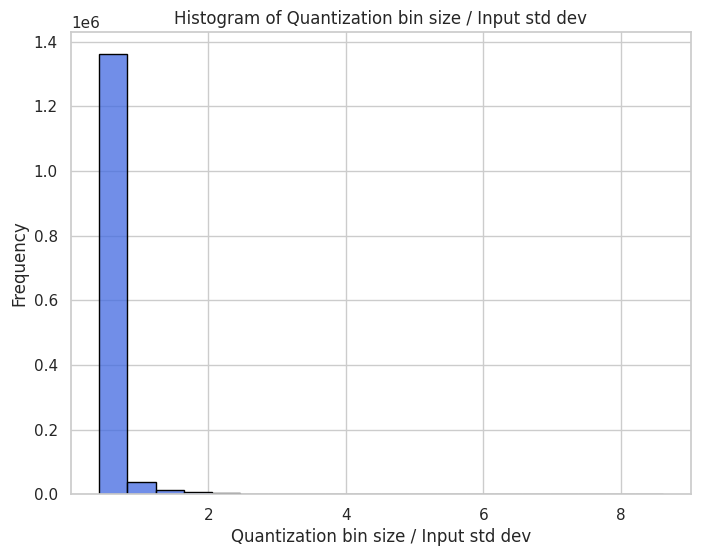

Figure 8. Plots of theoretical predictions of the quantization noise statistics against empirical results. Here, the weights are quantized to 8 bits.

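We also test the empirical standard deviation against that predicted by purely uniform noise ($q/\sqrt{12}$) and continue to observe a near-perfect correlation. Given the small $q/\sigma$ ratios under 8-bit quantization (Figure 8c), this is expected: the non-uniform correction terms are negligible.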

However, the prediction of the mean of the error is much poorer and spread out, likely due to the asymmetry of the actual values that the gaussian model doesn't account for. Nevertheless, the error is quite small (~1e-5). We also observe that most of the channels have small quantization grid sizes relative to the input variance, as seen in Figure 8c.
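The per-channel comparison can be mimicked on synthetic data to see what the experiment looks like when the Gaussian assumption holds exactly. In this sketch (ours, not the code behind Figures 8 and 9), each row of a random matrix is Gaussian with its own scale; we quantize each row with symmetric min-max quantization and compare the empirical error standard deviation with the uniform-noise prediction $q/\sqrt{12}$ at both 8 and 4 bits. The helper name `per_channel_error_stats` and all sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_rows, n_cols = 256, 4096
# Synthetic stand-in for a weight matrix: Gaussian rows with different scales and small offsets.
sigmas = rng.uniform(0.005, 0.05, size=(n_rows, 1))
mus = rng.normal(0.0, 0.001, size=(n_rows, 1))
W = mus + sigmas * rng.standard_normal((n_rows, n_cols))

def per_channel_error_stats(W, bits):
    """Empirical per-row error std, the PQN prediction q/sqrt(12), and the ratio q/sigma."""
    c = 2 ** (bits - 1) - 1
    q = np.abs(W).max(axis=1, keepdims=True) / c   # symmetric min-max grid size per row
    err = q * np.round(W / q) - W                   # nu = x' - x, per element
    emp_std = err.std(axis=1)
    pqn_std = q[:, 0] / np.sqrt(12)
    ratio = q[:, 0] / W.std(axis=1)
    return emp_std, pqn_std, ratio

for bits in (8, 4):
    emp, pqn, ratio = per_channel_error_stats(W, bits)
    corr = np.corrcoef(emp, pqn)[0, 1]
    rel_gap = np.max(np.abs(emp / pqn - 1))
    print(f"{bits}-bit: corr={corr:.4f}, max relative gap={rel_gap:.3f}, "
          f"q/sigma in [{ratio.min():.3f}, {ratio.max():.3f}]")
```

On these purely Gaussian rows, $q/\sigma$ stays well below 1 even at 4 bits, so the uniform-noise prediction should track the empirical values closely at both bit widths; real weights with heavier tails need not behave this way.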

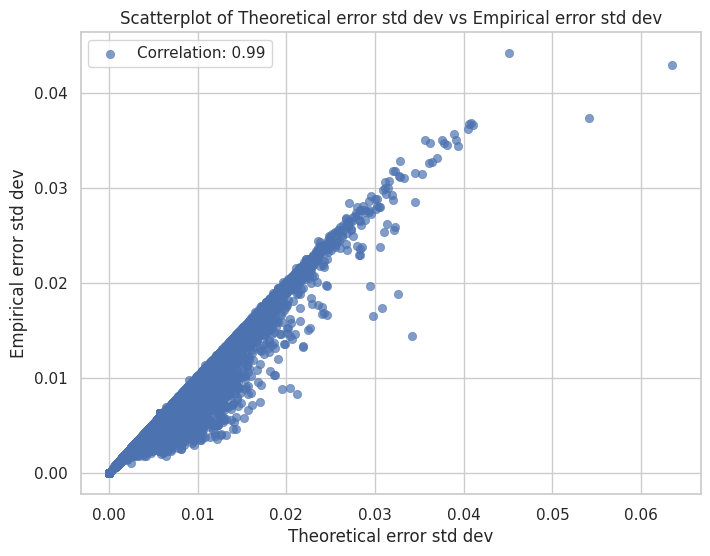

Next, let's take a look at the 4-bit quantization case. In Figure 9, we observe that the theoretical error variance tracks the empirical values well, but consistently underestimates them. This is a really interesting finding that we'll come back to. In the meantime, we also see that the predictions of the mean error are just as poor as in the 8-bit case, if not worse. A positive correlation is vaguely present, and most of the mean errors do fall close to 0. The worst gap in prediction is on the order of 1e-3, which is still relatively small but worryingly larger than in the 8-bit case, where the biggest gap was 8e-5.

Finally, we note from Figure 9c that the quantization grid sizes are larger relative to the input variance than in the 8-bit quantization case. In a few cases the bin size can be as large as three times the standard deviation.

| | | |
|---|---|---|
| (a) | (b) | (c) |

Figure 9. Plots of theoretical predictions of the quantization noise statistics against empirical results. Here, the weights are quantized to 4 bits.

Now, let's come back to the interesting underestimate in Figure 9a. Initially we suspected that our truncated-series approximations for the error mean and variance were too crude, so we tried adding more terms from the infinite series (see section 11.9 in Widrow & Kollár). However, this didn't change the results at all. Investigating more closely, we hypothesize that the underestimation is due to distributional differences between the actual data and the normal curve. As we've seen, the weights generally have heavier tails (due to more outliers) than the gaussian distribution would predict. With fewer output bits, the bin (grid) size gets bigger, so many of the outliers get dumped into the same bins as the 'in-distribution' values, causing the empirical error to increase. Since our model distribution doesn't have these tails, we underestimate the error.

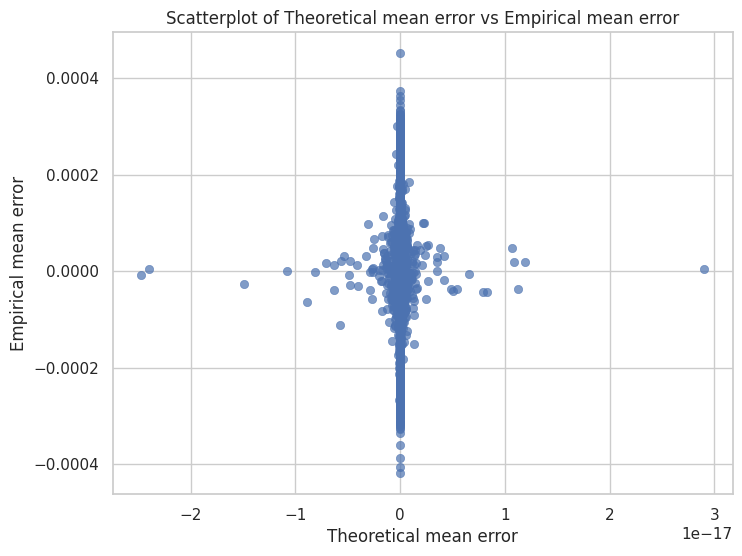

To test this theory, we re-run the predictions excluding the heaviest-tailed channels, i.e. the ~15% of weight channels that failed the normality test. Our results in Figure 10a show a much improved correlation, reinforcing our theory. Interestingly, we see a return to a bias towards predicting 0 as the theoretical error mean, shown in Figure 10b.

| | |
|---|---|
| (a) | (b) |

Figure 10. Removing the channels that failed the normality test, we find that the correlation between theory and experiment is much closer in the

So, what have we learnt from this analysis? First, we find that although the weights don't satisfy all the assumptions we made in theory (IID gaussian), our general noise model does a pretty good job of predicting the variance of the empirical quantization error. It is much less reliable at predicting the mean error, however, which exposes the gaps between our assumptions and reality.

Now, although the fit between theory and experiment is promising, one look at equation (2) gives me the shivers. Once we bring in a formulation for activation quantization error, modeling the error propagation through a network is going to be gnarly. What we really want to know is whether we can use the PQN model to substitute the error with uniform noise. Based on the last part of our theory section, we know this all hinges on the critical ratio $q/\sigma$.

A couple of key hidden details in our experiments

A critical difference between quantizing real model weights and working in theory is that our quantization bins are no longer infinite. Depending on the scheme we use, we could encounter clipping, which introduces an entirely new form of error. However, since we're using min-max quantization, every value has a nearest bin within the grid, so no clipping occurs. Thus, we treat the weight tensor as if it had an infinite quantization grid. Nonetheless, we can expect some discrepancies between theory and experiment due to this difference.

Also, since we're using symmetric quantization, our quantization grid is centered at 0. If it weren't, we would need to add a phase shift to our results[8].

On the Applicability of the PQN Model

Given that the data is normally distributed, when does our uniform noise approximation break down? Let's explore the key ratio $q/\sigma$.

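From the previous section, given that our inputs are Gaussian, every deviation of the error from uniform noise is scaled by a factor $e^{-2\pi^2 l^2 \sigma^2/q^2}$.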

So when will $q/\sigma$ be large enough for these factors to matter?

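It's easy to see that when $q/\sigma$ is small, even the leading $l = 1$ factor vanishes and the error is effectively uniform.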

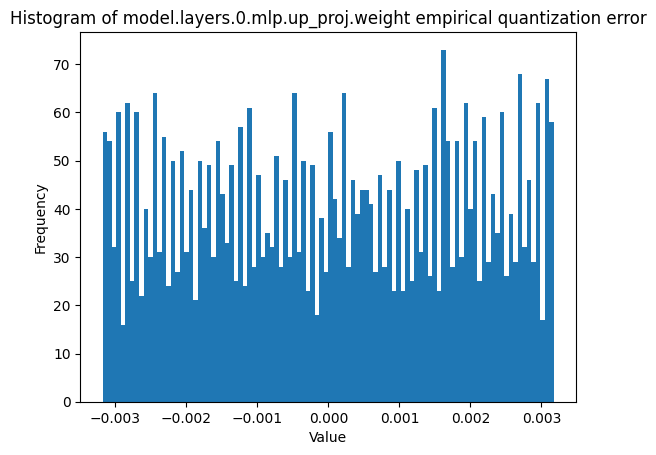

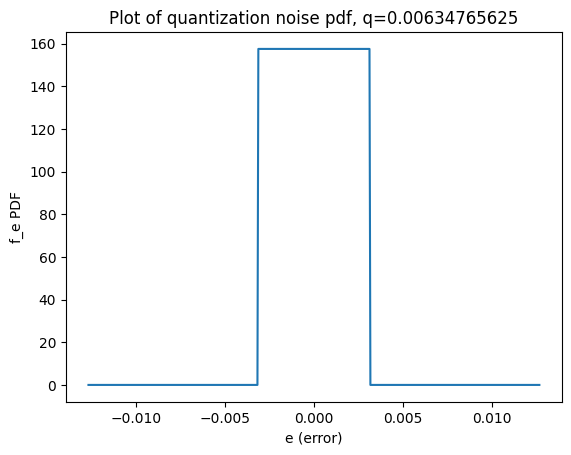

For attention projection matrices in LLaMA under 8-bit per-tensor quantization,

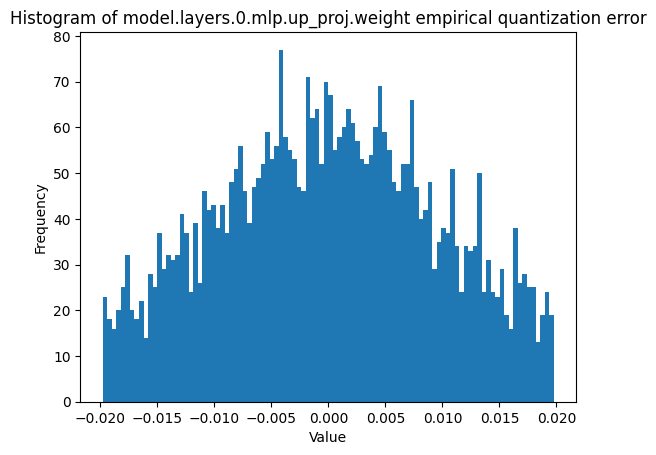

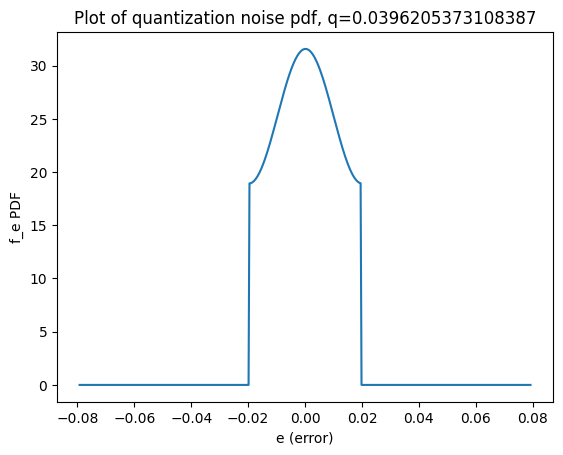

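Alternatively, if we're quantizing to smaller bit sizes, the denominator $2^{b-1} - 1$ in $q$ shrinks (from 127 at 8 bits to 7 at 4 bits), so for the same weights $q/\sigma$ grows by a factor of about 18.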
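A quick simulation shows how min-max quantization sets $q/\sigma$. This is our own sketch, assuming i.i.d. standard Gaussian values, and the helper name `minmax_ratio` is hypothetical. The more values share one grid (for example, a whole tensor instead of one row), the further the maximum reaches into the tails and the larger $q/\sigma$ becomes; dropping from 8 to 4 bits multiplies it by 127/7. The printed $\sqrt{2\ln N}$ is only a rough leading-order guide to how $\max|w|/\sigma$ grows with $N$.

```python
import numpy as np

rng = np.random.default_rng(0)

def minmax_ratio(n, bits, trials=5):
    """Average q/sigma for symmetric min-max quantization of n i.i.d. N(0, 1) values."""
    c = 2 ** (bits - 1) - 1
    ratios = []
    for _ in range(trials):
        w = rng.standard_normal(n)
        ratios.append(np.abs(w).max() / c / w.std())
    return float(np.mean(ratios))

for n in (4096, 1_000_000):
    approx = np.sqrt(2 * np.log(n))   # rough leading-order growth of max|w| / sigma
    print(f"N={n}: 8-bit q/sigma={minmax_ratio(n, 8):.3f}, "
          f"4-bit q/sigma={minmax_ratio(n, 4):.3f}, sqrt(2 ln N)={approx:.2f}")
```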

| | |
|---|---|
| (a) | (b) |

Figure 11. A side-by-side of theoretical and empirical error distributions for a particular weight channel, under 8-bit quantization. This is with

| | |
|---|---|
| (a) | (b) |

Figure 12. A side-by-side of theoretical and empirical error distributions for a weight channel, under 4-bit quantization. This is with

From these discussions and Figures 8c and 9c, we can take away the following: the uniform noise model (PQN) is surprisingly robust, especially in the case of per-channel weight quantization. The breakdown of the model in the per-channel case depends entirely on the presence of outliers that can skew the distribution tails. These seem to occur rarely (in the 4-bit case, only about 0.53% of weight channels have

Conclusions

To wrap up, let's list our overall findings:

Theory:

- The quantization error model is built on modeling the input distribution of values. From this we can formulate a process that gives us the distribution of quantized outputs and errors, letting us calculate error statistics for actual data.

- Under certain assumptions, we can model quantization as if we were adding uniform noise to our data. This is known as the PQN (pseudo-quantization noise) model.

- Although the gaussian distribution doesn't satisfy the key assumptions of this PQN model, when the quantization grid is small enough, we can effectively still use the PQN model.

In our experiments, there are two axes along which we check whether the theory works in practice. The first is whether the gaussian approximation to the weights gives reasonable statistics. The second is whether the stronger assumptions of the PQN model give reasonable statistics in practice.

Experiment:

- The Gaussian approximation to the weights lets our theoretical model capture the second-order statistics of the quantization error well, and gives a rough approximation to the first-order statistics as well.

- We find that when there aren't too many outliers, the PQN model works great to model the noise. This can simplify the overall analysis tremendously since we can just use uniform noise additions rather than propagating our error distribution through the network.

Now, how can we expand this? An immediate thought is to test it on activations. Unfortunately, unlike weights, activations don't follow a particular distribution, significantly complicating our analysis. However, we might still be able to use the ideas here to capture activation error propagation through the model. We could try looking at how the weight errors cascade through the network and perturb activation values.

Another area to explore is when catastrophic model degradation occurs - does it happen through a series of accumulated smaller quantization errors, or a few large errors with outliers? If it's the latter, modeling the distribution becomes much harder since we need to capture the presence of such outliers.

I hope to follow up with this soon!

Thanks for reading to this point! I'm always looking for feedback, and would love to hear some if you have any. Stay tuned for future posts!

Footnotes

[1] People who like to quantize.

[2] One approach is to study the loss landscape under quantization, as done here.

[3] As opposed to low-precision floating point quantization, a new area of research that is especially interesting for efficient model training.

[4] To be clear, the research cited above provides its own justifications for doing so; those works definitely don't take the substitution of quantization error with uniform noise lightly.

[5] The process for continuous and discrete signals is mostly the same.

[6] The authors argue that the distribution approach lends itself to some neat properties that apply directly to real signals, which we'll soon see. However, it's worth noting that in the real world, it is often difficult to identify a signal's 'parent distribution'. This limitation will be a major obstacle when we look at activation distributions, and when we start exploring quantization in the training pass.

[7] We note that a significance test can really only tell us whether there is enough evidence to reject the null hypothesis (in this case, that the data is drawn from a Gaussian distribution). So all the test really says is that there isn't sufficient evidence that the data is not drawn from a gaussian. I've tried a variety of tests, but the Shapiro-Wilk test is the most straightforward and widely used test for normality.

[8] Generally this isn't a cause for concern, as quantization schemes always include 0 in the grid; otherwise, zero-padding and other operators can introduce catastrophic errors into the model.

[9] N = …, $C = 2^{b-1} - 1 = 127$ for 8-bit integer quantization. Here we're doing per-tensor quantization to make … large enough.